Google foobar, part iv: layin' bricks

written on February 6, 2023

This post is the fourth installment of the foobar challenge series. You can read the first one to learn more about foobar and see what sort of intro problems the challenge presents if you don't want any real spoilers.

WARNING: Obviously, MAJOR spoilers ahead, for the problems themselves and possible solutions or hints. Even if you're attempting one of these and feel completely stuck, give it another go before reading the rest. Keep reading only if you don't mind getting spoiled!

Problem statement

This one was kinda hard for me, and a lot of fun too. It's about laying bricks (no, not that kind!):

Given a number \(n\), calculate the number of valid unique staircases that can be built with \(n\) bricks. For a staircase to be considered valid, it has to have at least two steps, and each step must be taller than the previous one.

The minimum value of \(n\) is \(3\), and the maximum is \(200\).

Now, what does this mean? Let's take the case of 3 bricks (which coincidentally is the smallest

amount that's able to produce a valid staircase). There is only one possible way to construct a

staircase with 3 bricks (each # symbolizes a brick):

# # #

With 4 bricks, we still only have one possible staircase:

# # # #

We couldn't do this, for example, because then the two rightmost steps would be of the same height (so the staircase would be invalid):

# # # #

With 5 bricks, there are actually two ways we can build a staircase:

# # # # # # # # # #

And so on, you get the idea.

@@@@@@@@@@

@@@@@@@@@@

SECOND WARNING: If you want to solve this yourself, STOP HERE! Anything past this point is a spoiler of some part of figuring out the solution, or of the solution itself. You've been warned!

@@@@@@@@@@

@@@@@@@@@@

First ideas

If you've read any of the other posts then you know that I said that generally it's a bad idea

to try to construct the solution; constructing

in this case would be actually building

(or enumerating) every valid staircase, and just counting them up to get the solution.

While I stand by the idea that it's not great to try and solve the problem that way, I do also think that sometimes spending some time on constructing the solution can teach you valuable lessons about the nature of the problem, so that you can then go and solve it in some other way.

For this problem, I wanted to started simple: what if we only had to count staircases with exactly two steps? Let's start calculating how many 2-step staircases we have for a given number of bricks:

| Number of bricks | Possible 2-step staircases | Number of possible 2-step staircases |

|---|---|---|

| 3 | \((2, 1)\) | 1 |

| 4 | \((3, 1)\) | 1 |

| 5 | \((4, 1)\), \((3, 2)\) | 2 |

| 6 | \((5, 1)\), \((4, 2)\) | 2 |

| 7 | \((6, 1)\), \((5, 2)\), \((4, 3)\) | 3 |

| 8 | \((7, 1)\), \((6, 2)\), \((5, 3)\) | 3 |

| 9 | \((8, 1)\), \((7, 2)\), \((6, 3)\), \((5, 4)\) | 4 |

The way I found out the formula

for this was by imagining that we start with all the

bricks in one pile, and then we form the different staircases by moving one brick at a time

from the pile to a new pile to its right. For example, for 7 bricks:

$$(7, 0) \rightarrow (6, 1) \rightarrow (5, 2) \rightarrow (4, 3)$$

Of course, the first version doesn't count (we can't have a 0-brick step), so we have 3 staircases. We know when to stop because putting one more brick on the second pile would make it bigger than the first pile, which would be an invalid staircase (or a mirrored duplicate of \((4, 3)\), whichever way you prefer).

But actually… we don't have to iterate and manually build the staircases in code in order to count them! The most amount of bricks we can move to the right is essentially half the bricks, or \(\lfloor \frac{n}{2} \rfloor\). But that's not entirely correct either: for \(n = 6\), if we move \(\lfloor \frac{6}{2} \rfloor = 3\) bricks to the right we get \((3, 3)\), which is not a valid staircase. So, the correct answer is that we can move \(\lceil \frac{n}{2} \rceil - 1\) bricks to the right. That's how many 2-step staircases we can have for any given \(n\).

Great! So there must be a similar formula for 3-step staircases, 4-step staircases etc, and then we can just sum everything and bingo! Right? Right???

Uh… maybe? I couldn't find a similar formula at all for a 3-step staircase: the problem is much more complex, because you can now move bricks to two new columns, and where you move them influences where you can move future bricks. Furthermore, even if we found one, we would need to find one for 4-step, 5-step, and possibly \(n\)-step staircases, and then we would also need to know what the maximum number of steps is for a given \(n\) (which is not simple to calculate either).

I got stumped by this, in fact I got so stumped that I went back to trying to construct staircases. I won't describe every solution I tried in detail, but basically the first concrete (there's a brick pun somewhere here I guess?) idea I had was to iteratively build all staircases for every number of bricks from \(3\) up to \(n\), and then to use pre-existing staircases if possible to complete the 2-step ones. For example, for \(n = 6\), you can construct the 2-step ones using the algorithm I gave before, and then remark that you can also make a 3-step one by using \(3\)'s \((2, 1)\) staircase and putting the remaining bricks in front of it, to make \((3, 2, 1)\). In code, it looks like this:

solution.py

import math

def staircases_iter(n):

res = {}

for nb_bricks in range(3, n + 1):

res[nb_bricks] = []

# Compute the 2-step staircases.

for j in range(1, int(math.ceil(nb_bricks / 2.0))):

res[nb_bricks].append((nb_bricks - j, j))

# Build n-step staircases out of the previous ones.

for j in range(3, n - 2):

for substaircase in res[j]:

if substaircase[0] < i - j:

res[nb_bricks].append((i - j, *substaircase))

return res[n]

A dictionary is used as a sort of memoization data structure

that holds the results of

the previous \(n\) values. The reason why the second loop (on line 14) goes up to \(n - 3\) is

because the first 3-step staircase starts with 3 bricks (\((3, 2, 1)\)), so it makes no sense

to attempt to build an \(m\)-step staircase (with \(m \geq 3\)) while starting with less than 3

bricks.

This is, pretty surprisingly, one of the fastest construction methods I came up with—and it's \(O(n^2)\). It can't handle big inputs, of course; this is even Python 3 actually, which means that it's going to be slightly faster:

$ shell

$ time python3 solution.py 100 # 100 bricks 444792 python3 solution.py 100 1,80s user 0,24s system 99% cpu 2,039 total $ time python3 solution.py 120 # 120 bricks 2194431 python3 solution.py 120 10,98s user 1,09s system 99% cpu 12,073 total

The numbers are right, but at 100 bricks it's already taking over 2 seconds, at 120 bricks it's over 12 seconds, and at 150 bricks my machine killed the process before it could end. Clearly, this is not the way to do it.

At this point, I was trying to optimize this idea: we're wasting enormous amounts of time

looking through every possible substaircase while a small part of them is really available to

use for a given \(n\). I thought, maybe you can just break

out of that second loop when you come across the first substaircase you can't use? Nope, can't

do that: for \(n = 13\) for example, you would reject 7's \((6, 1)\) pair as a substaircase

(because \((6, 6, 1)\) is not a valid staircase), but you would accept 8's \((4, 3, 1)\)

(because \((5, 4, 3, 1)\) is a valid staircase). There's no possible way to order them

by number of bricks that wouldn't also make you break too soon and miss one or multiple solutions.

However, there might be some way to globally sort them: I think there is, by assigning

them each a score of (n - sum(substaircase)) / substaircase[0] (I won't explain in

detail how I got it but I think it's easy to figure out), but unfortunately that's only going

to be valid for a given \(n\), which means you need to sort them on every iteration if

you take the previous staircases_iter algorithm. While

this cuts down on the amount of iterations, it adds complexity via sorting, and overall I think

the performance is about the same—if not worse, as comparison-based sorting can't be

faster than \(O(n \lg(n))\), bringing the total to \(O(n^2 \lg(n))\).

Identifying the fundamental operations

Let's take a couple of steps back at this point, and rethink our strategy. We've based the

previous algorithms on two fundamental operations

:

- Moving a brick from the first column to the second (for 2-step staircases);

- Using a preexisting substaircase (for \(m\)-step staircases, \(m \geq 3\)).

What if we found a set of fundamental operations, that would allow us to create any \(m\)-step staircase, and that look kind of like the operation of moving bricks?

Well… let's try to see what operations we need for the first \(n\) that gives birth to a 3-step staircase, \(n = 6\):

-

We can generate all 2-step staircases (\((5, 1)\), \((4, 2)\)) using the

move brick from first column to second column

operation; - We can also generate the 3-step staircase by taking the \((4, 2)\) staircase, and moving a brick from the first column to a new column: \((3, 2, 1)\).

It's this very insight that helped me move forward: you can build any staircase, starting from \((n - 1, 1)\), with these two operations, which we'll clearly define here:

- Move a brick from the first column to the last column;

- Move a brick from the first column to a new column on the right.

This is all you need; you can try it out with any \(n\). How do we implement it though? I first tried to express this as a binary tree, where the left child would apply (1) on the parent and the right child would apply (2) on the parent until they reach an invalid staircase. While it did work, I found that it was slow because it was spending way too much time navigating the tree (e.g., going up hundreds of nodes to explore another subtree on the complete other side of the tree).

Eventually, I came up with another implementation that finally managed to be faster than the previous algorithm: we can think of \((n - 1, 1)\) as a seed, and we can apply the operations (1) and (2) as mutations to that seed (can you tell I've been reading papers on fuzzing?). Then, if the results of the mutations are valid staircases, we can add them to the seed pool and let them be mutated further, until no more valid mutations can be produced. It looks something like this in code:

solution.py

def seeder(n):

if n == 3:

return 1

seeds1 = [[n - 1, 1]]

seeds2 = []

count = 1

while seeds1 or seeds2:

if seeds1:

mut1 = seeds1.pop()

mut1[0] -= 1

mut1[-1] += 1

count += 1

if mut1[0] > mut1[1] + 1:

if (len(mut1) == 2 and mut1[0] - 1 > mut1[1] + 1) or (

len(mut1) > 2 and mut1[-2] > mut1[-1] + 1

):

seeds1.append(list(mut1))

if mut1[-1] > 1:

seeds2.append(list(mut1))

if seeds2:

mut2 = seeds2.pop()

mut2[0] -= 1

mut2.append(1)

count += 1

if mut2[0] > mut2[1] + 1:

if (len(mut2) == 2 and mut2[0] - 1 > mut2[1] + 1) or (

len(mut2) > 2 and mut2[-2] > mut2[-1] + 1

):

seeds1.append(list(mut2))

if mut2[-1] > 1:

seeds2.append(list(mut2))

return count

This kinda looks like a mess, but it's actually not that complicated: seed1 and seed2

correspond to the seed pools for the two mutation operations I described before. Separating

them into two different seed pools makes things a little bit faster because they only work on

seeds that they actually can mutate; operation (2) can't mutate seed \((5, 3, 1)\) for

example, but operation (1) can (creating \((4, 3, 2)\)).

The big ifs on lines 16-22 and 30-36 are simply guards to

guarantee that the mutations that will be created on the next round will be valid.

Alternatively, you can check if every mutation is valid after its created, but I think

that's a little bit slower. We also don't keep track of what the staircases are, we

only keep track of their count; that also saves us a bit of time.

Let's time it, this time with Python 2:

$ shell

$ time python2 solution.py 100 # 100 bricks 444792 python2 solution.py 100 0,21s user 0,00s system 99% cpu 0,213 total $ time python2 solution.py 120 # 120 bricks 2194431 python2 solution.py 120 1,00s user 0,00s system 99% cpu 1,007 total

Wow, much faster! Unfortunately though, the upper limit (200 bricks) still takes around 2 minutes on my machine (which of course is unacceptable for the challenge). I spent quite a bit of time thinking of how to optimize this further; I don't wanna do the math, but I'm pretty sure this is as low as you get time-complexity-wise while being able to actually enumerate and save all of the staircases.

At this point, I was ready to bite the bullet and accept that the only way this would get faster, fast enough to complete the challenge, was to find a formula for the count that doesn't simply enumerate every staircase like all of the methods I described up to this point.

Looking for something more \(O(n)\)-y

Thinking of the problem in a more mathematical

way instead of an engineering way made me

realize that you can get the same exact problem by asking: given an integer \(n\), how many

ways are there to express it as a unique sum of unique positive integers?

If you think about it, the "unique sum" part takes care of eliminating the mirror

staircases (i.e., if you count \((4, 3)\) you can't also count \((3, 4)\)), and the unique

positive integers

part takes care of the validity rules for the steps (e.g., you can't

count \((2, 2)\), since you're reusing the same integer twice). Expressing the problem this way

gives us this for \(n = 8\), for example:

$$

\begin{matrix}

8 & = & 1 & + & 7 \\

8 & = & 2 & + & 6 \\

8 & = & 3 & + & 5 \\

8 & = & 1 & + & 2 & + & 5 \\

8 & = & 1 & + & 3 & + & 4 \\

\end{matrix}

$$

Of course, there's also \(8 = 8 + 0\), but since we said that it's a sum of positive integerS we can discard that solution.

I think this is where I kind of took a wrong turn and started to think of this as the wrong

kind of combinatorics problem, like a \(x\) choose \(y\)

problem. Eventually, I

discovered stars and

bars and then the twelvefold way

(which has an amazingingly intuitive explanation on its Wikipedia article by the

way!), and tried to express it that way.

It kinda works for 2-step staircases? You basically end up with the same formula as the one we

found at the beginning (\(\lceil n / 2 \rceil - 1\) possible 2-step staircases). However, as

soon as you try to describe 3-step staircases it becomes confusing, because you have to limit

your choices of placing bars

between stars

based on the choices made with the

previous bars. I think I eventually found a formula (I honestly don't even know where it is in

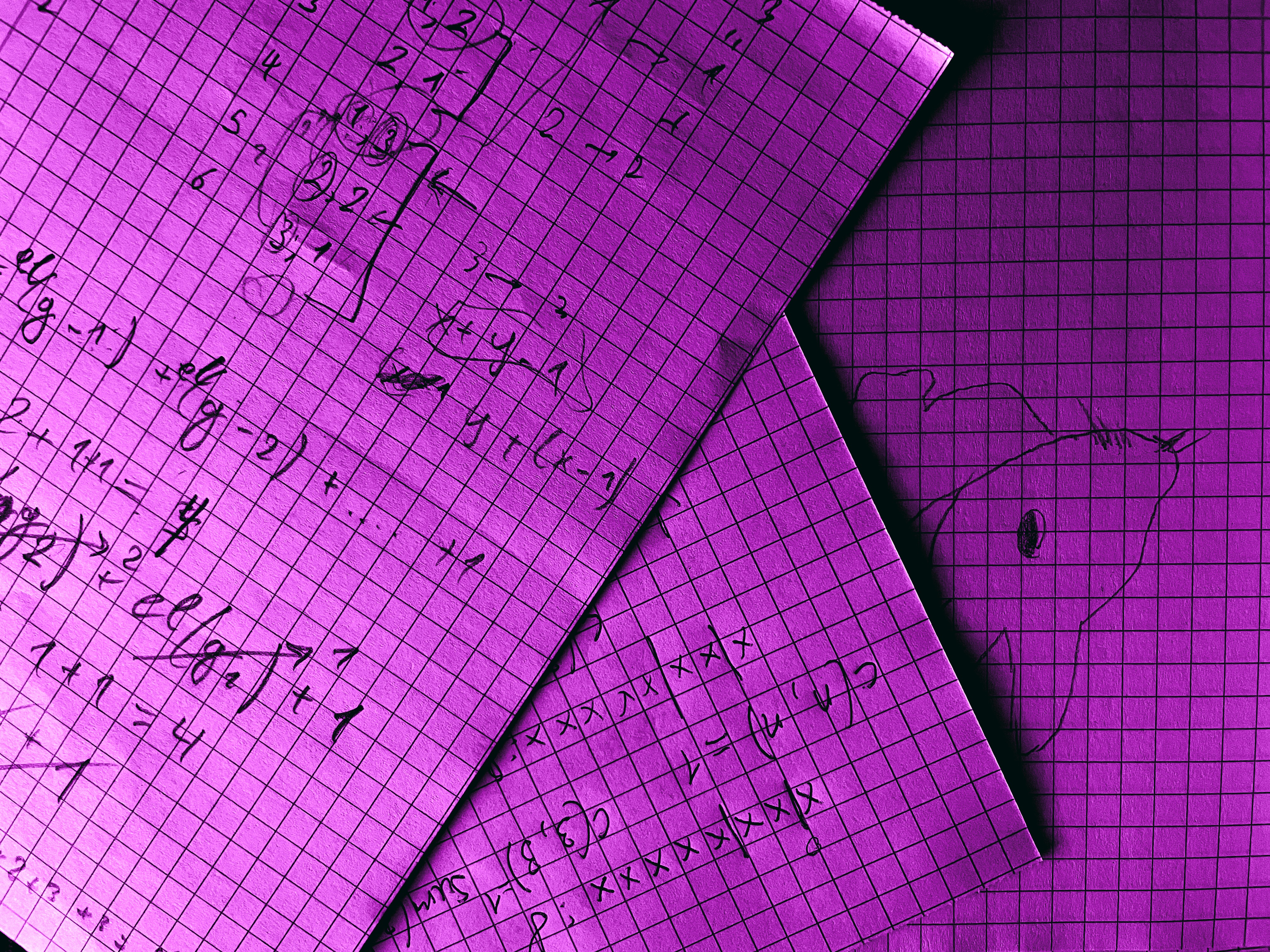

the mess of papers I made trying to figure this out), but it doesn't account for symmetries, so

the result is kinda useless: you get double

the staircases, except it's not that simple,

because for example a 3-step staircase can be expressed in 6 different ways if you don't filter

out the symmetries (because \(3! = 6\)). So the result is completely useless, because you don't

know how many of the symmetries are from 2-step staircases, 3-step staircases, 4-step

staircases and so on, so you don't know how to filter them out. Plus, you have the same problem

of not knowing at what \(m\) of \(m\)-step staircases to stop at for a given \(n\).

It's useless to try to solve this like a \(x\) choose \(y\)

-type combinatorics problem

in my opinion, because you can't just choose any positive integer; basically, you

can't fit that requirement of the sum has to equal \(n\)

in the \(x\) choose

\(y\)

expression.

Solution

My next idea was the one that finally did it: a sort of recursive formula, where basically you

make a choice for an integer (or a step, in the bricks version of the problem) and set it

aside

, and then work on the remaining problem.

It works by first trying to find all the possible sums that express \(n\), starting at \(1\). Those are composed by the sums expressing \(n - 1\) starting at \(2\) (1 is already taken), plus those expressing \(n\) starting at \(2\). The second one is needed because here we also take \(n\) itself as a possibility (I'll explain why later); we can of course correct this by just subtracting \(1\) from the final result.

Let's take a concrete example: \(n = 3\). Assume \(C(n, k)\) is the count of unique sums of unique positive integers equal to \(n\), starting from integer \(k\), that we defined earlier. We have: $$ \begin{matrix} C(3, 1) & = & C(2, 2) & + & C(3, 2) & & \\ & = & 1 & + & C(1, 3) & + & C(3, 2) \\ & = & 1 & + & 0 & + & 1 \\ & = & 2 & & & & \\ \end{matrix} $$

As we said, we need to subtract \(1\), so we end up with \(C(3, 1) - 1 = 1\), the correct answer for \(n = 3\).

The reason why we need that second \(C(n_i, k_{i - 1} + 1)\) term can become apparent for larger numbers, like \(n = 6\):

: 1 and : 0 are

the ones with a trivial solution.

You can see that it's that second term that discovers the staircase \((2, 4)\) essentially, on

the left child of the right child of the root (the right child of the right child of the root

just discovers \((6, 6)\) which we're throwing out anyway). If you don't exactly get it, you

can think of sums(6, start=2) as if we pick 2 as the

first number, what sums can we make with the rest of the numbers?

.

So, the idea is to implement this as a recursive function:

solution.py

def sum_counter(n, start=1):

if n == start:

return 1

if n < start:

return 0

res = sum_counter(n - start, start=start + 1) + sum_counter(n, start=start + 1)

return res

So simple! There are two trivial cases we use to stop the recursion:

-

(k, start=k): there is exactly one way to expresskas a sum starting atk. -

(k, start=m),k < m: there is no way to expresskas a sum using numbers greater than itself.

Unfortunately, this solution also suffers from a bad case of not fast enough:

$ shell

$ time python2 solution.py 100 # 100 bricks 444793 python2 solution.py 100 0,93s user 0,00s system 99% cpu 0,927 total $ time python2 solution.py 120 # 120 bricks 2194432 python2 solution.py 120 4,95s user 0,00s system 99% cpu 4,947 total

That's actually considerably slower than the seeder

solution! Not to worry however, because this one can be saved with some good ol' dynamic

programming.

Take another look at the call graph for \(n = 6\); it all essentially comes down to trivial solutions, which are either \(1\) for \(C(i, i)\), or \(0\) for everything else. We can construct a 2D array, where one dimension will be \(n\) and the other will be the starting number, \(k\). We can fill the diagonal with \(1\)s and every other cell with \(0\)s, and then we can build the rest of the valid cells starting from \(C(n, n)\) working backwards. We can then have a second loop that starts at \(3\) and goes up to the real \(n\) we want to find; that way, every step is \(O(1)\), making the final solution \(O(n)\), which is exactly what we wanted!

This is how I implemented it:

solution.py

MAX_BRICKS = 200

def sum_counter_dynamic(n, start=1):

memo = []

for i in range(MAX_BRICKS):

memo.append([])

for j in range(MAX_BRICKS):

if i == j:

memo[i].append(1)

else:

memo[i].append(0)

for bricks in range(3, n + 1):

for first_step in range(bricks - 1, 0, -1):

memo[bricks - 1][first_step - 1] = (

memo[bricks - first_step - 1][first_step] + memo[bricks - 1][first_step]

)

return memo[n - 1][0] - 1

And sure enough, this is blazingly fast compared to anything else we've seen:

$ shell

$ time python2 solution.py 100 # 100 bricks 444792 python2 solution.py 100 0,01s user 0,00s system 98% cpu 0,012 total $ time python2 solution.py 120 # 120 bricks 2194431 python2 solution.py 120 0,00s user 0,01s system 97% cpu 0,011 total $ time python2 solution.py 200 # 200 bricks 487067745 python2 solution.py 200 0,01s user 0,00s system 98% cpu 0,013 total

Hooray! I was so happy to see this work, and I think this solution is quite elegant all things considered, even though we went through one hell of a ride to find it.